Google Stitch: An AI tool for UI design and coding

At Google I/O 2025, the company unveiled Stitch, a generative AI tool designed to turn rough user interface (UI) ideas into polished designs, complete with front-end code. Powered by Gemini 2.5 Pro, Stitch is available as an experiment through Google Labs, and Google says it can significantly streamline front-end development.

From Prompt to Prototype in Minutes

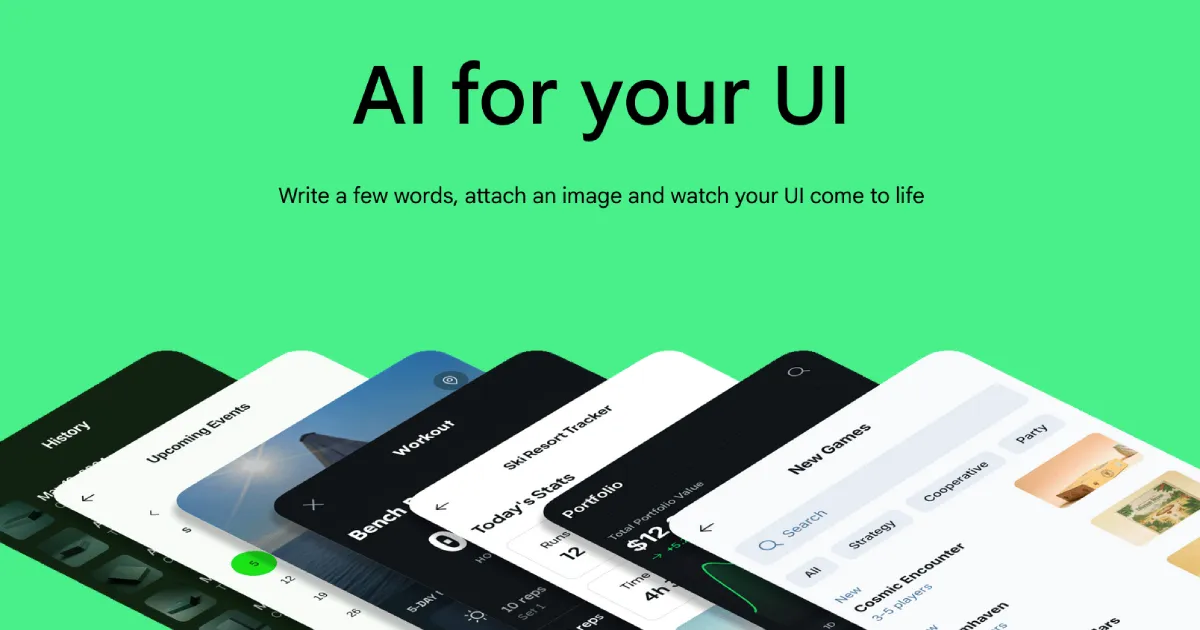

Stitch lets developers enter natural-language prompts or visual references (wireframes, sketches, or screenshots) and get back rendered UI designs along with the corresponding HTML/CSS code. According to Google, the tool can produce "complex UI designs and frontend code in minutes," cutting out much of the traditionally labor-intensive cycle of designing UI components first and then hand-coding them.

The tool currently accepts English-language input only. Users can steer the design by specifying visual themes, layout styles, color palettes, and the user-experience elements they want. Stitch then generates an interface tailored to both the user's design intent and their coding needs.

Built for Experimentation

One of Stitch's standout features is its ability to generate multiple interface variants from the same prompt. Developers and designers can use this to compare different visual directions quickly before settling on a final layout. Whether prototyping a mobile app or sketching out a dashboard, users can explore a range of aesthetic and functional options without starting from scratch each time.

Integration with Figma and Developer Workflows

In keeping with modern design workflows, Stitch can export generated UI assets and code directly to Figma, the popular interface design tool, where teams can refine them, align them with existing design systems, and collaborate. Stitch provides the skeleton of an application's UI, while Figma remains the primary platform for pixel-level polish and for handoff between design and development teams.

Stitch's coding capabilities also overlap with Figma's recently announced Figma Make, which likewise uses generative AI to build front-end components. By building code generation directly into the design step, Google appears to be positioning Stitch as a complement to, or even a competitor of, the generative design tools users are already exploring.

A Strategic Move in Developer-Centric AI

Stitch arrives as Google is clearly doubling down on AI-assisted software development. With tools like Gemini Code Assist and now Stitch, Google is extending its reach across the design-to-code pipeline. Stitch also gives Google a practical way to keep developers, particularly those already in its ecosystem, from migrating to competing platforms as AI continues to reshape development workflows.

Stitch is not meant to replace comprehensive design platforms, but it is a notable new entry in AI-powered rapid prototyping. For developers who juggle both design and implementation, it offers a faster path from a loose idea to a working first draft of front-end code.

As Stitch continues to evolve within Google Labs, it may change how teams approach early-stage UI design and development, making the first draft of an app as simple as typing an idea or uploading a sketch.