Microsoft is testing microfluidic cooling for AI chips

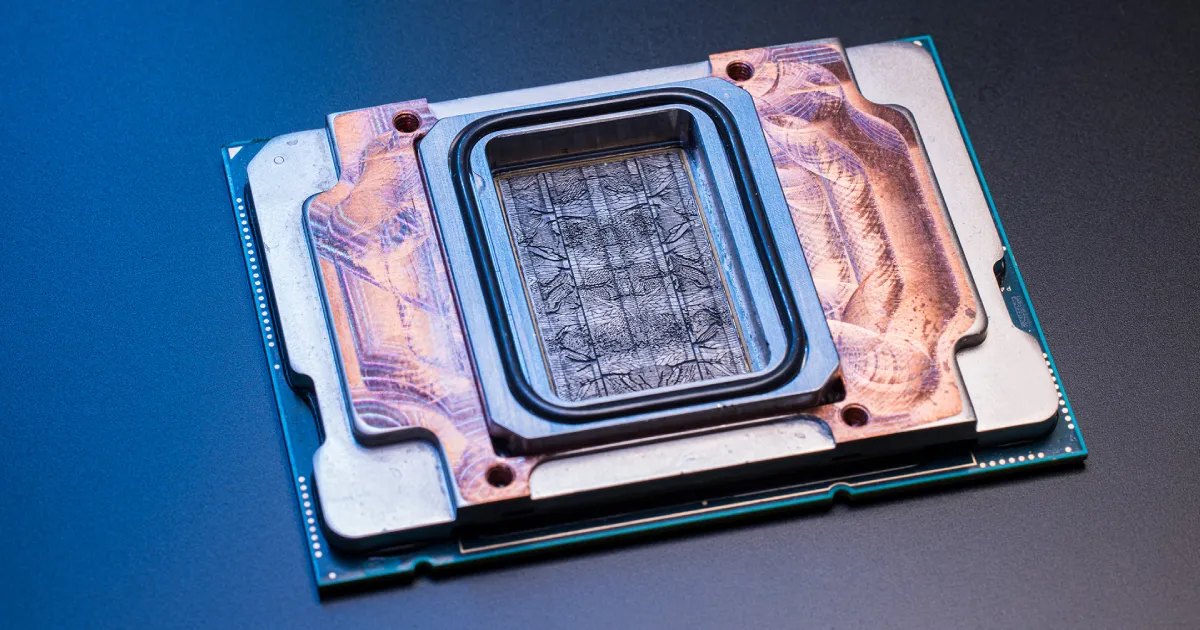

Microsoft Corp. is testing a novel way to tackle one of the biggest energy drains in artificial intelligence data centers: keeping processors cool. The company is experimenting with microfluidics, a technology that channels small amounts of fluid directly through etched pathways in chips to regulate heat.

Husam Alissa, who oversees Microsoft’s systems technology, said the approach has already been applied in prototypes to both server chips powering Office cloud apps and the GPUs that run AI workloads. Because the fluid directly contacts the chips, the coolant can be warmer than in conventional cooling systems, up to 70°C (158°F), and still work effectively. Early testing, demonstrated last week at Microsoft’s Redmond campus, showed significant efficiency gains and the potential for stacking chips to boost performance further.

The technique could also enable temporary overclocking, pushing chips past their normal operating limits to handle short-term spikes in demand. For example, Microsoft Teams sees usage peaks at the start of meetings, often at the hour or half-hour mark. Instead of adding more chips, Microsoft could overclock existing ones briefly to manage the surge, said Jim Kleewein, a Microsoft technical fellow.

Microfluidics is part of a broader push to optimize Microsoft’s rapidly expanding data centers, which added more than 2 gigawatts of capacity in the past year. “When you are operating at that scale, efficiency is very important,” said Rani Borkar, vice president for hardware systems and infrastructure at Azure.

Beyond cooling, Microsoft is also advancing other experimental hardware efforts. The company is deploying hollow-core fiber networking, which transmits data through an air-filled core instead of solid glass, in collaboration with Corning Inc. and Heraeus Covantics. The lightweight material starts out just inches long and can be stretched to span several kilometers, and the resulting fiber significantly boosts transmission speeds.

Meanwhile, Microsoft is exploring custom designs for memory chips, though details remain under wraps. High-bandwidth memory (HBM), a critical component for AI, is currently sourced from suppliers such as Micron Technology. Borkar, who oversees Microsoft’s Maia AI chip, described HBM as “the end-all and be-all” of today’s AI computing but added that the company is evaluating what comes next.

By investing in microfluidics, networking, and memory innovation, Microsoft aims to reduce energy demands while building faster, more powerful infrastructure for AI—a critical move as global demand for data and computing capacity continues to soar.